Create an Azure Key Vault Resource – Keeping Data Safe and Secure-2

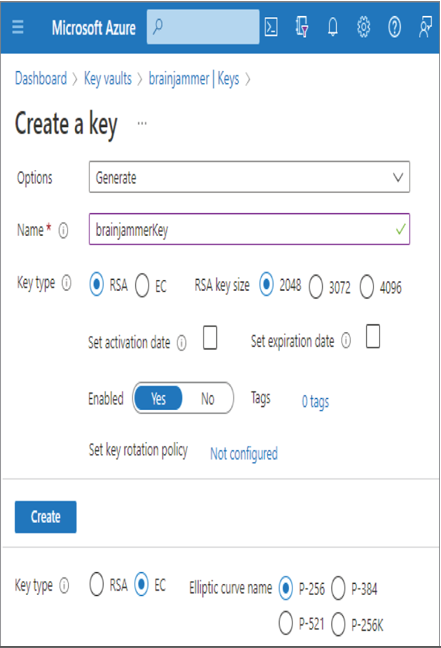

The Networking tab enables you to configure a private endpoint or bind the key vault to a virtual network (VNet). Provisioning Azure resources and binding them to a VNet and private endpoints are discussed and performed in a later section. After provisioning the key vault, you created a key, a secret, and a certificate.

The keys in an Azure key vault are those used in asymmetric (public-key) cryptography. As shown in Figure 8.2, two types of keys are available with the Standard tier: Rivest-Shamir-Adleman (RSA) and elliptic curve (EC). These keys are used to encrypt and decrypt data. Both types are asymmetric, which means each consists of a private key and a public key. The private key must be protected: any client can use the public key to encrypt data, but only the holder of the private key can decrypt it. Each key type offers multiple strengths. For example, RSA keys can be 2,048, 3,072, or 4,096 bits long. The longer the key, the higher the level of security, but long RSA keys have caveats concerning speed and compatibility: operations with a longer key, such as decryption, take more time, and not all platforms support the largest key sizes. Therefore, you need to consider which level of security is best for your use case and security compliance requirements.
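To make the public/private split concrete, the following is a minimal textbook-RSA sketch in Python. The tiny primes, the exponent, and the function names are illustrative assumptions, not anything Key Vault exposes. Real RSA keys are 2,048 bits or longer, and production encryption adds padding that this toy version omits.

```python
# Toy textbook RSA with tiny primes: for illustration only, never for real data.
# Requires Python 3.8+ for the modular inverse form pow(e, -1, phi).

def make_toy_keypair(p: int = 61, q: int = 53, e: int = 17):
    """Return ((n, e), (n, d)): the public key and the private key."""
    n = p * q                    # modulus shared by both keys
    phi = (p - 1) * (q - 1)      # Euler's totient of n
    d = pow(e, -1, phi)          # private exponent: modular inverse of e mod phi
    return (n, e), (n, d)

def encrypt(public_key, message: int) -> int:
    """Anyone holding the public key can encrypt."""
    n, e = public_key
    return pow(message, e, n)

def decrypt(private_key, ciphertext: int) -> int:
    """Only the holder of the private key can decrypt."""
    n, d = private_key
    return pow(ciphertext, d, n)

public_key, private_key = make_toy_keypair()
ciphertext = encrypt(public_key, 65)
recovered = decrypt(private_key, ciphertext)
print(ciphertext, recovered)
```

The modulus here is only a few bits long, which is why it can be broken by hand. The cost of the `pow` calls grows with the size of the modulus, which is the speed trade-off that comes with larger key sizes.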

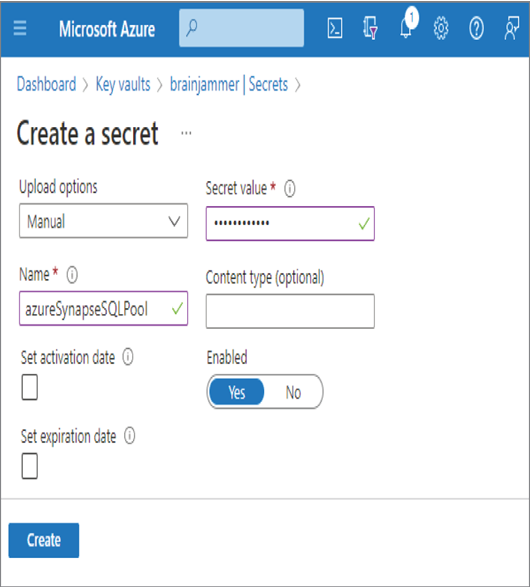

A secret is something like a password, a connection string, or any other text up to 25 KB that needs protection. Connection strings and passwords are commonly stored in configuration files or hard-coded into application code. This is not secure, because anyone who has access to the code or to the server hosting the configuration file has access to the credentials and therefore to the resources they protect. Instead, applications can be coded to retrieve the secret from a key vault and then use it to make the required connections. In a production environment, the application that requests the secret authenticates with a managed identity or service principal.
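As a minimal sketch of that approach, the following Python code reads a connection string from a key vault at runtime instead of hard-coding it. It assumes the `azure-identity` and `azure-keyvault-secrets` packages are installed. The vault URL and the secret name `sql-connection-string` are hypothetical placeholders.

```python
def build_secret_client(vault_url: str):
    """Create a SecretClient for the given vault URL."""
    # Imported lazily so this module still loads where the Azure SDK is absent.
    from azure.identity import DefaultAzureCredential
    from azure.keyvault.secrets import SecretClient

    # DefaultAzureCredential tries several sources, including environment
    # variables for a service principal, a managed identity, and local
    # developer sign-ins.
    return SecretClient(vault_url=vault_url, credential=DefaultAzureCredential())

def get_connection_string(client, secret_name: str) -> str:
    """Fetch the current version of a secret and return its value."""
    return client.get_secret(secret_name).value

# Example usage (hypothetical vault URL and secret name):
# client = build_secret_client("https://<your-vault-name>.vault.azure.net")
# connection_string = get_connection_string(client, "sql-connection-string")
```

Passing the client in as a parameter keeps the lookup easy to test with a stand-in object, and it means the credential type can change between development and production without touching the calling code.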

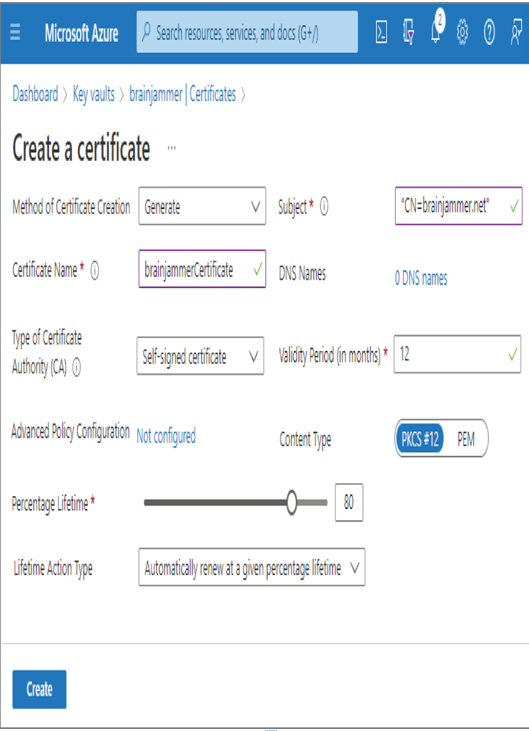

Secrets stored in a key vault are encrypted when added and decrypted when retrieved.

The certificate support in Azure Key Vault provides the management of X.509 certificates. If you have ever worked on an Internet application that uses the HTTP protocol, you have likely used an X.509 certificate. When this type of certificate is applied to that protocol, it secures communication between the entities engaged in the conversation. To employ the certificate, you must use HTTPS, which runs HTTP over Transport Layer Security (TLS). In addition to securing communication over the Internet, certificates can also be used for authentication and for signing software.

Consider the certificate you created in Exercise 8.1. When you click the certificate, you will notice it has a Certificate Version that resembles a GUID but without the dashes. Associated with that Certificate Version is a Certificate Identifier, which is a URL that gives you access to the certificate details. When you retrieve the certificate using the Azure CLI, you will see information like the base-64-encoded certificate, the link to the associated key (kid), and the link to the associated secret (sid), similar to that shown in Figure 8.6.

FIGURE 8.6 Azure Key Vault X.509 certificate details
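The structure of these identifiers can be illustrated with a short Python sketch that splits an identifier URL into its parts and shows the version with GUID-style dashes restored. The helper names and the sample URL in the usage comment are hypothetical. The sketch assumes the common identifier layout of vault URL, collection, object name, and optional version.

```python
import uuid
from typing import NamedTuple, Optional
from urllib.parse import urlparse

class VaultObjectId(NamedTuple):
    vault_url: str
    collection: str          # "certificates", "keys", or "secrets"
    name: str
    version: Optional[str]   # 32 hex characters, or None when no version is given

def parse_identifier(identifier: str) -> VaultObjectId:
    """Split a Key Vault object identifier URL into its components."""
    parsed = urlparse(identifier)
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) not in (2, 3) or parts[0] not in ("certificates", "keys", "secrets"):
        raise ValueError(f"not a Key Vault object identifier: {identifier}")
    version = parts[2] if len(parts) == 3 else None
    return VaultObjectId(f"{parsed.scheme}://{parsed.netloc}", parts[0], parts[1], version)

def version_as_guid(version: str) -> str:
    """Format a 32-character hex version with dashes, GUID style."""
    return str(uuid.UUID(hex=version))

# Example usage (hypothetical identifier):
# cert_id = parse_identifier(
#     "https://contoso-vault.vault.azure.net/certificates/demo-cert/"
#     "0123456789abcdef0123456789abcdef"
# )
# version_as_guid(cert_id.version)
```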

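The links to the key and the secret can be used to retrieve their details in a similar fashion. The first Azure CLI command retrieves the key of the X.509 certificate, which is identified by the kid attribute; Key Vault returns only the public portion of that key, while the private portion stays in the vault. The second retrieves the secret using the endpoint identified by the sid attribute; that secret holds the certificate itself, including the private key when the key is marked as exportable.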

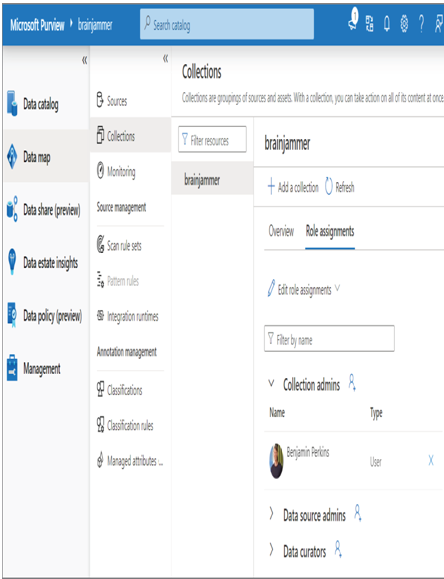

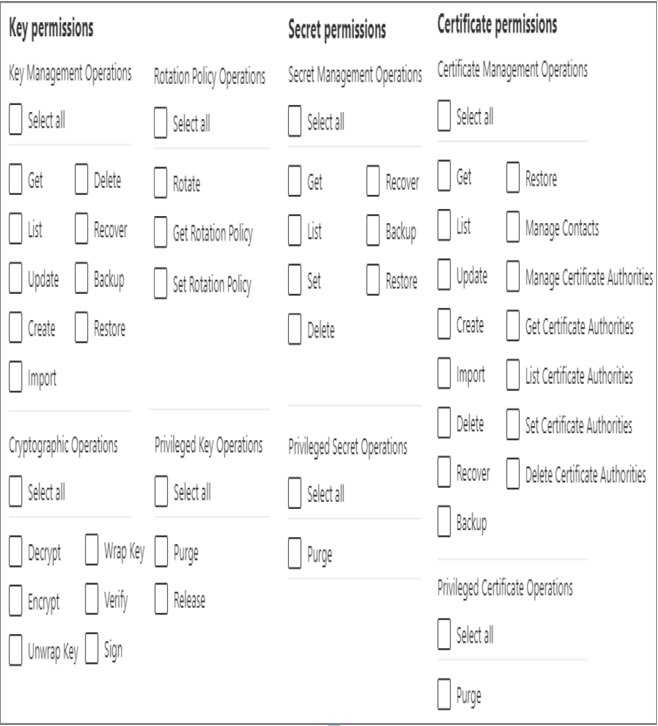
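The same lookups can be sketched with the Azure SDK for Python, assuming the `azure-identity`, `azure-keyvault-certificates`, `azure-keyvault-keys`, and `azure-keyvault-secrets` packages are installed. Key Vault creates a key and a secret with the same name as the certificate, which is what the kid and sid identifiers point to. The function names and the certificate name in the usage comment are hypothetical.

```python
def build_clients(vault_url: str):
    """Create certificate, key, and secret clients that share one credential."""
    # Imported lazily so this module still loads where the Azure SDK is absent.
    from azure.identity import DefaultAzureCredential
    from azure.keyvault.certificates import CertificateClient
    from azure.keyvault.keys import KeyClient
    from azure.keyvault.secrets import SecretClient

    credential = DefaultAzureCredential()
    return (
        CertificateClient(vault_url=vault_url, credential=credential),
        KeyClient(vault_url=vault_url, credential=credential),
        SecretClient(vault_url=vault_url, credential=credential),
    )

def describe_certificate(certificate_client, key_client, secret_client, name: str) -> dict:
    """Collect metadata about a certificate and the key and secret behind it."""
    cert = certificate_client.get_certificate(name)
    key = key_client.get_key(name)           # key addressed by kid
    secret = secret_client.get_secret(name)  # secret addressed by sid
    return {
        "certificate_id": cert.id,
        "kid": cert.key_id,
        "sid": cert.secret_id,
        "key_type": key.key_type,
        "secret_content_type": secret.properties.content_type,
    }

# Example usage (hypothetical vault URL and certificate name):
# clients = build_clients("https://<your-vault-name>.vault.azure.net")
# details = describe_certificate(*clients, "demo-cert")
```

The function deliberately reports only metadata and never returns the secret's value, since that value can contain sensitive key material. Each of the three calls also needs its own permission (get on certificates, keys, and secrets respectively), so a caller with access to only one of them will fail partway through.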

The ability to list, get, and use keys, secrets, and certificates is controlled by the permissions you set up while creating the key vault. Put some thought into who and what gets which kind of permissions to these resources.