Design Data Encryption for Data at Rest and in Transit – Keeping Data Safe and Secure

Encryption is a very scientific, mathematics‐heavy concept. The internals are outside the scope of this book, but in simple terms, when data is encrypted, it looks like a string of scrambled letters and numbers that has no apparent value. The following is the encrypted form of the word csharpguitar, produced using the key created in Exercise 8.1:

p0syrFCPufrCr+9dN7krpFe7wuwIeVwQNFtySX0qaX3UcqzlRifuNdnaxiTu1XgZoKwKmeu6LTfrH
rGQHq4lDClbo/KoqjgSm+0d0Ap/y2HR34TFgoxTeN0KVCoVKAtu35jZ52xeZgj1eYZ9dww2n6psGG
nMRlux/z3ZDvm4qlvrv55eAoSawbCGWOql3mhdfHFZZxLBCN2eZzvBpaTSNaramME54ELMr6ScIJI
ITq6XJYTFH8BGvPaqhfTTO4MbizwenpijIFZvdn3bzQGbnPElht0j+EQ7aLvWOOyzJjlKcR8MN4jO
oYNULCZTBi/BVvlhYpUsKxxN+YW27POMAw==

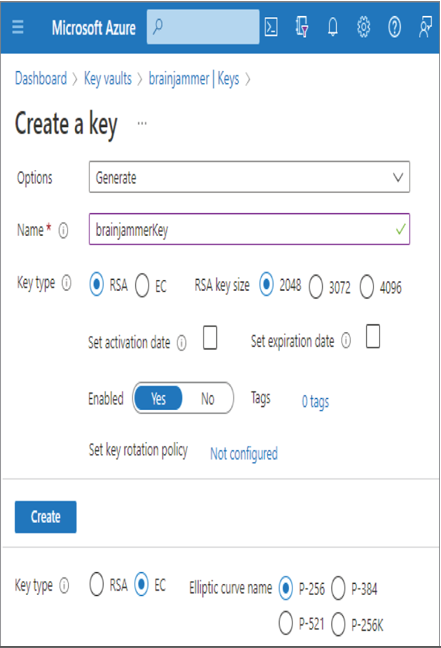

There is no realistic method for anyone, or any computer, to revert that set of characters back into the original word without the private key. That is the power of encryption implemented using public and private keys: only by having access to the private key can one make sense of that character sequence. Because the private key remains in the key vault, the only means of decryption is to use the az keyvault key decrypt Azure CLI command or a REST API that has access to the private key. This leads into two very important security concepts that pertain greatly to the storage of data on Azure: encryption‐at‐rest and encryption‐in‐transit.
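The scrambled text in the example is Base64, a printable encoding of the raw ciphertext bytes. The following minimal Python sketch decodes it and reports its size. It only inspects the ciphertext and cannot decrypt it. The 256-byte result is consistent with a 2048-bit RSA key, but that key size is an inference, not something stated for Exercise 8.1.

```python
import base64

# Ciphertext from the example above, rejoined into a single Base64 string.
ciphertext_b64 = (
    "p0syrFCPufrCr+9dN7krpFe7wuwIeVwQNFtySX0qaX3UcqzlRifuNdnaxiTu1XgZoKwKmeu6LTfrH"
    "rGQHq4lDClbo/KoqjgSm+0d0Ap/y2HR34TFgoxTeN0KVCoVKAtu35jZ52xeZgj1eYZ9dww2n6psGG"
    "nMRlux/z3ZDvm4qlvrv55eAoSawbCGWOql3mhdfHFZZxLBCN2eZzvBpaTSNaramME54ELMr6ScIJI"
    "ITq6XJYTFH8BGvPaqhfTTO4MbizwenpijIFZvdn3bzQGbnPElht0j+EQ7aLvWOOyzJjlKcR8MN4jO"
    "oYNULCZTBi/BVvlhYpUsKxxN+YW27POMAw=="
)

# validate=True rejects any character outside the Base64 alphabet.
raw = base64.b64decode(ciphertext_b64, validate=True)

print(len(ciphertext_b64))  # 344 Base64 characters
print(len(raw))             # 256 bytes (2048 bits) of ciphertext
```

The 12-character word becomes 256 bytes because the output length depends on the key, not on the input. Nothing about the original word is visible in the decoded bytes.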

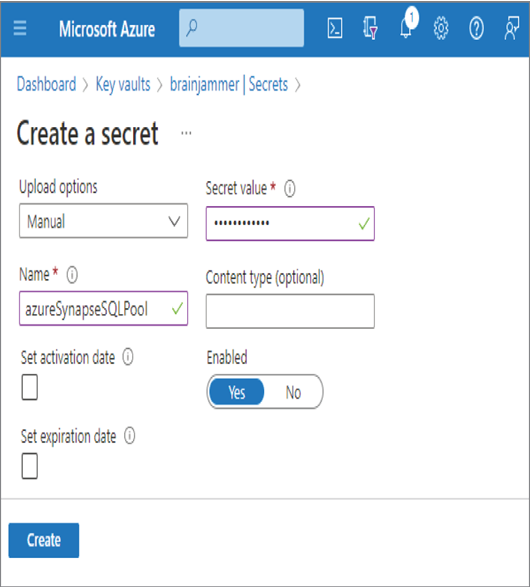

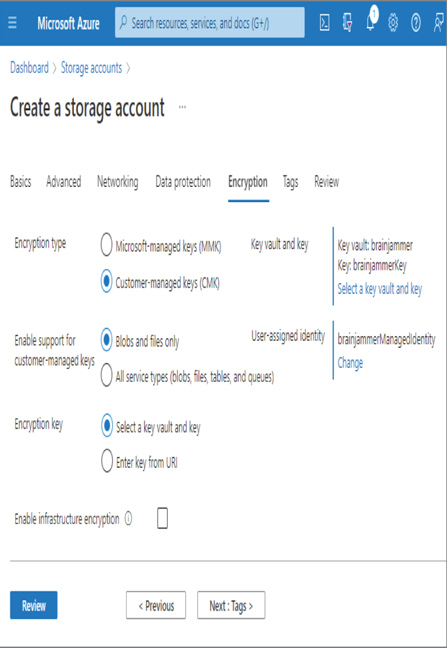

Data stored in an Azure storage account is encrypted by default. No action is required by you to encrypt the data that is stored in a container. It is encrypted even if it is not used, which is where the name encryption‐at‐rest comes from. The data is simply stored, idle, doing nothing, but is secured by encryption. This kind of protection is intended to defend against a bad actor getting access to the physical hard drive that contains the data. When the bad actor attempts to access the data, they will see only the scrambled characters. If they do not have the associated keys, which should only be accessible in a key vault, there is no practical way to decrypt the data. Therefore, your data is safe, even when it is resting and not being used.

Back in Exercise 3.1, where you created an Azure storage account and an ADLS container, there was a tab named Encryption. That tab includes two radio buttons, as shown in Figure 8.12. The default is to use a Microsoft‐Managed Key (MMK) for the encryption‐at‐rest operation; the other option is named Customer‐Managed Key (CMK). If you select CMK, then you can reference a key you have created in an Azure key vault to use as the default encryption key.

These storage account encryption options are available for customers who need the maximum amount of security due to compliance or regulatory requirements. Also notice the Enable Infrastructure Encryption check box. When this box is selected, the data stored in the account is doubly encrypted. Double encryption is available for both data at rest and data in transit. Instead of being encrypted with just one key, the data is encrypted with two separate keys, the second key being implemented at the infrastructure level. This protects against scenarios where one of the encryption keys or algorithms is compromised. When Enable Infrastructure Encryption is selected and one of the encryption keys is compromised, your data is still encrypted with 256‐bit AES encryption by the other key. The data remains safe in this scenario.

Another common encryption technology on the Azure platform, one that is targeted toward databases, is Transparent Data Encryption (TDE). TDE protects data at rest on Azure SQL databases, Azure SQL data warehouses, and Azure Synapse Analytics SQL pools. The entire database, including data files and database backups, is encrypted using an AES encryption algorithm by default. As with Azure Storage, the encryption key can be managed by Microsoft or by the customer, in which case it is stored in an Azure key vault.

FIGURE 8.12 Azure storage account encryption type
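The choices in Figure 8.12 correspond to a handful of properties in the storage account's resource definition. The following Python sketch builds that encryption section as a plain dictionary. The property names (keySource, keyvaultproperties, requireInfrastructureEncryption) follow the Azure Resource Manager schema for storage accounts as best understood here, so verify them against the current reference before relying on them. The function name, vault URI, and key name are hypothetical placeholders.

```python
import json
from typing import Optional


def storage_encryption_settings(
    key_vault_uri: Optional[str] = None,
    key_name: Optional[str] = None,
    infrastructure_encryption: bool = False,
) -> dict:
    """Build the 'encryption' section of a storage account definition.

    With no key vault details, a Microsoft-managed key (MMK) is used.
    With both supplied, a customer-managed key (CMK) is referenced.
    """
    if (key_vault_uri is None) != (key_name is None):
        raise ValueError("Provide both key_vault_uri and key_name, or neither")

    settings = {
        # MMK is the default key source.
        "keySource": "Microsoft.Storage",
        # Corresponds to the Enable Infrastructure Encryption check box.
        "requireInfrastructureEncryption": infrastructure_encryption,
    }
    if key_vault_uri is not None:
        # CMK: point the account at a key held in an Azure key vault.
        settings["keySource"] = "Microsoft.Keyvault"
        settings["keyvaultproperties"] = {
            "keyvaulturi": key_vault_uri,
            "keyname": key_name,
        }
    return settings


# Hypothetical values, for illustration only.
mmk = storage_encryption_settings()
cmk = storage_encryption_settings(
    key_vault_uri="https://vaultName.vault.azure.net",
    key_name="exampleKey",
    infrastructure_encryption=True,
)
print(json.dumps(cmk, indent=2))
```

The sketch only produces the settings. Applying them would be done through the portal, an ARM template, or the Azure CLI.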

The opposite of resting is active, which here means data being retrieved by a remote consumer. As the data moves from the location where it is stored to the consumer, it can be vulnerable to traffic capture. This is where the concept of encryption‐in‐transit comes into scope. You encrypt data in transit by using TLS. Version 1.2 is widely supported and should be treated as the minimum; TLS 1.3 is newer and more secure where both the service and the client support it. As previously mentioned, TLS is achieved by using an X.509 certificate in combination with the HTTP protocol, which together form HTTPS. Consider the following common Azure product endpoints:

- https://accountName.blob.core.windows.net/container

- https://accountName.dfs.core.windows.net

- https://web.azuresynapse.net

- https://workspaceName.dev.azuresynapse.net

- https://accountName.documents.azure.com:443

- https://vaultName.vault.azure.net

- https://adbEndpoint.azuredatabricks.net

In all cases, the transfer of data happens using HTTPS, meaning the data is encrypted while in transit between the service that hosts it and the consumer who has authorization to retrieve it. When connecting to Linux machines, the protocol typically used is Secure Shell (SSH), which also ensures the encryption of data in transit; HTTPS is also a supported protocol.

An additional encryption concept should be mentioned here: encryption‐in‐use. This concept is implemented using a feature named Always Encrypted and is focused on the protection of sensitive data stored in specific columns of a database. Identification numbers, credit card numbers, PII, and need‐to‐know data are examples of data that typically resides in the columns of a database. This kind of encryption, which is handled client‐side, is intended to prevent DBAs or administrators from viewing sensitive information when there is no business justification to do so.
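Returning to encryption‐in‐transit, a client can insist on HTTPS and on a minimum TLS version rather than relying on defaults. The following Python sketch uses only the standard library. The helper names make_tls12_context and uses_https are hypothetical, and the endpoint values are the placeholder URLs from the list above, not real hosts.

```python
import ssl
from urllib.parse import urlsplit


def make_tls12_context() -> ssl.SSLContext:
    """Return a client-side TLS context that refuses anything below TLS 1.2."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def uses_https(url: str) -> bool:
    """Return True when the URL's scheme is https."""
    return urlsplit(url).scheme == "https"


# Placeholder endpoints copied from the list above.
ENDPOINTS = [
    "https://accountName.blob.core.windows.net/container",
    "https://accountName.dfs.core.windows.net",
    "https://workspaceName.dev.azuresynapse.net",
    "https://accountName.documents.azure.com:443",
    "https://vaultName.vault.azure.net",
]

context = make_tls12_context()
for endpoint in ENDPOINTS:
    # Refuse to proceed with any endpoint that is not HTTPS.
    if not uses_https(endpoint):
        raise ValueError(f"Refusing non-HTTPS endpoint: {endpoint}")
```

The context could then be passed to an HTTP client, for example through the context argument of urllib.request.urlopen, so that a connection negotiating an older protocol version fails instead of silently downgrading.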

The final topic to discuss in this section has to do with the WITH ENCRYPTION SQL option. In Exercise 2.3 you created a view using a statement similar to the following:

CREATE VIEW [views].[PowThetaClassicalMusic]

In Exercise 5.1 you created a stored procedure using the following command:

CREATE PROCEDURE brainwaves.uspCreateAndPopulateFactReading

Each of those statements can be used with the WITH ENCRYPTION option, which is placed after the object name in the CREATE command and before the AS keyword, like the following:

CREATE VIEW [views].[PowThetaClassicalMusic] WITH ENCRYPTION

CREATE PROCEDURE brainwaves.uspCreateAndPopulateFactReading WITH ENCRYPTION

If you then attempt to view the text for the stored procedure, you will not see it; instead, you will see a message explaining that it is encrypted. The WITH ENCRYPTION option provides a relatively low level of security: it obscures the definition rather than strongly encrypting it, and technically savvy individuals can recover it with relative ease. However, it is quick and simple to implement, making it worthy of consideration.
