Azure Policy – Keeping Data Safe and Secure

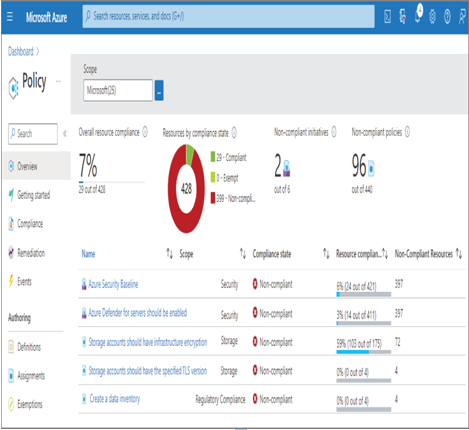

One of the first experiences with a policy in this book came in Exercise 4.5, where you implemented data archiving. You used a lifecycle management policy and applied it directly to an Azure storage account. The policy, which you can view in the Chapter04/Ch04Ex04 directory on GitHub, identifies when blobs should be moved to the Cool or Archive tier and when the data should be purged. Azure Policy, on the other hand, enables an Azure administrator to define and assign policies at the Subscription and/or Management Group level. Figure 8.9 illustrates how the Azure Policy Overview blade may look.

FIGURE 8.9 The Azure Policy Overview blade

Each policy shown in Figure 8.9 links to the policy definition constructed using a JSON document, as follows. You can also assign the policy to the Subscription and a Resource group.

The policy rule applies to all resources in the Microsoft.Storage resource provider namespace and targets the minimumTlsVersion attribute. When this policy is assigned to the Subscription, TLS 1.2 is the default value when a storage account is provisioned, and TLS 1.1 is also allowed; TLS 1.0 is not. Provisioning an Azure storage account that uses TLS 1.0 would therefore fail because of this policy.
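To make the allow and deny behavior concrete, the following is a minimal Python sketch that models a rule of this kind as a dictionary laid out like an Azure Policy definition and evaluates it against a resource. It is an illustration under stated assumptions, not the policy definition shown in the portal: the restriction to the Microsoft.Storage/storageAccounts type, the allowed values, and the `evaluate` helper are hypothetical, and the helper understands only the two conditions used here.

```python
# Property alias for the minimum TLS version of a storage account.
TLS_FIELD = "Microsoft.Storage/storageAccounts/minimumTlsVersion"

# Hypothetical rule, laid out like the policyRule section of a definition.
# The allowed values are inferred from the description in the text.
POLICY_RULE = {
    "if": {
        "allOf": [
            {"field": "type", "equals": "Microsoft.Storage/storageAccounts"},
            {"field": TLS_FIELD, "notIn": ["TLS1_1", "TLS1_2"]},
        ]
    },
    "then": {"effect": "deny"},
}


def evaluate(resource: dict, rule: dict = POLICY_RULE) -> str:
    """Return the rule's effect if every condition matches, else 'allow'.

    Only the 'equals' and 'notIn' conditions used above are supported.
    """
    for condition in rule["if"]["allOf"]:
        value = resource.get(condition["field"])
        if "equals" in condition and value != condition["equals"]:
            return "allow"  # condition not met, so the rule does not apply
        if "notIn" in condition and value in condition["notIn"]:
            return "allow"  # value is one of the permitted TLS versions
    return rule["then"]["effect"]


def storage_account(tls_version: str) -> dict:
    """Build a simplified resource description for the sketch."""
    return {"type": "Microsoft.Storage/storageAccounts", TLS_FIELD: tls_version}


results = {v: evaluate(storage_account(v)) for v in ("TLS1_0", "TLS1_1", "TLS1_2")}
```

In this sketch `results` maps TLS1_0 to the deny effect and the other two versions to allow, which mirrors the outcome described above for a storage account provisioned with TLS 1.0.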

Design a Data Retention Policy

Data discovery and classification are required for determining the amount of time you need to retain your data. Some regulations require maximum and/or minimum data retention timelines, depending on the kind of data, which means you need to know what your data is before designing the retention policy. The policy needs to include not only your live, production‐ready data but also backups, snapshots, and archived data. You can achieve this discovery and classification using what was covered in the previous few sections of this chapter. You might recall the mention of Exercise 4.5, where you created a lifecycle management policy that included data archiving logic. The concept and approach are the same here. The scenario in Exercise 4.5 covered the movement and deletion of a blob based on the number of days since it was last accessed. In this scenario, however, the context is the removal of data based on a retention period. Consider the following policy example, which applies to the blobs and snapshots stored in Azure storage account containers. The policy states that the data is to be deleted 90 days after the date of creation. This represents a retention period of 90 days.
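A 90‐day retention rule of this kind can be sketched in Python as a dictionary that follows the Azure Storage lifecycle management policy layout and is then serialized to JSON. Treat it as a hedged illustration rather than the policy from the exercise files: the rule name, the blockBlob filter, and the `is_past_retention` helper are assumptions added for the example.

```python
import json
from datetime import datetime, timedelta, timezone

RETENTION_DAYS = 90

# Hypothetical lifecycle management policy: delete base blobs and
# snapshots once they are more than 90 days past their creation date.
lifecycle_policy = {
    "rules": [
        {
            "enabled": True,
            "name": "delete-after-retention",  # hypothetical rule name
            "type": "Lifecycle",
            "definition": {
                "actions": {
                    "baseBlob": {
                        "delete": {"daysAfterCreationGreaterThan": RETENTION_DAYS}
                    },
                    "snapshot": {
                        "delete": {"daysAfterCreationGreaterThan": RETENTION_DAYS}
                    },
                },
                "filters": {"blobTypes": ["blockBlob"]},  # assumed filter
            },
        }
    ]
}


def is_past_retention(created: datetime, now: datetime,
                      days: int = RETENTION_DAYS) -> bool:
    """Return True when an item is older than the retention period."""
    return now - created > timedelta(days=days)


# JSON text in the shape a storage account lifecycle policy expects.
policy_json = json.dumps(lifecycle_policy, indent=2)

now = datetime(2024, 6, 1, tzinfo=timezone.utc)
old_blob_expired = is_past_retention(now - timedelta(days=120), now)
new_blob_expired = is_past_retention(now - timedelta(days=30), now)
```

The helper only mirrors the arithmetic behind the rule so that you can reason about which blobs fall outside the retention period; the storage platform, not your code, performs the actual deletion when such a policy is applied.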

When it comes to defining retention periods in relational databases like Azure SQL and Azure Synapse Analytics SQL pools, there are numerous creative approaches. One approach is to add a column to each data row that contains a timestamp identifying its creation date. You can then run a stored procedure, executed from a cron scheduler or triggered using a Pipeline activity, that removes the rows whose timestamp is older than the retention period. Because this additional column can consume substantial storage when your datasets are large, apply it only to datasets that are required to adhere to such policies. It is common to perform backups of relational databases, so remember that the backups, snapshots, and restore points also need to be managed and bound to retention periods, as required.

In the context of Azure Databricks, you most commonly work with files or delta tables. Files carry metadata that identifies their creation and last modified dates, which can be referenced and used for managing their retention. Delta tables can also include a column for the data’s creation date, which is then used for managing data retention. When working with delta tables, deleting rows does not immediately remove the underlying data files; you use the VACUUM command to remove files that the table no longer references and that are older than the retention threshold. Any data retention policy you require in that workspace should include the execution of the VACUUM command.
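The timestamp‐column approach for relational tables can be sketched in Python as follows. To keep the example self‐contained and runnable, it uses an in‐memory SQLite database as a stand‐in for Azure SQL, and the table name, column names, and `purge_expired` function are hypothetical. In practice the DELETE statement would live in a stored procedure that a scheduler or Pipeline activity invokes.

```python
import sqlite3
from datetime import datetime, timedelta, timezone


def purge_expired(conn: sqlite3.Connection, retention_days: int,
                  now: datetime) -> int:
    """Delete rows created before the retention cutoff; return the count."""
    cutoff = (now - timedelta(days=retention_days)).isoformat()
    # ISO 8601 strings with the same UTC offset sort chronologically,
    # so a plain string comparison is sufficient in this sketch.
    cursor = conn.execute(
        "DELETE FROM readings WHERE created_at < ?", (cutoff,)
    )
    conn.commit()
    return cursor.rowcount


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE readings ("
    "id INTEGER PRIMARY KEY, value REAL, created_at TEXT NOT NULL)"
)

now = datetime(2024, 1, 1, tzinfo=timezone.utc)
conn.executemany(
    "INSERT INTO readings (id, value, created_at) VALUES (?, ?, ?)",
    [
        (1, 0.5, (now - timedelta(days=120)).isoformat()),  # past retention
        (2, 0.7, (now - timedelta(days=10)).isoformat()),   # within retention
    ],
)

deleted = purge_expired(conn, retention_days=90, now=now)
remaining_ids = [row[0] for row in conn.execute("SELECT id FROM readings")]
```

Passing `now` as a parameter, rather than reading the clock inside the function, keeps the purge logic deterministic and easy to verify before it is scheduled against real data.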

A final subject that should be covered in the context of data retention is time‐based retention policies for immutable blob data. “Immutable” means that once a blob is created, it can be read but not modified or deleted. This is often referred to as a write once, read many (WORM) approach. The use case for such a feature is to store critical data and protect it against removal or modification. Numerous regulatory and compliance laws require documents to be stored for a given amount of time in their original state.
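The WORM behavior can be illustrated with a small conceptual model in Python. This is not the Azure Storage API; the `WormBlob` class and `ImmutableBlobError` exception are hypothetical names, and the sketch assumes that deletion becomes possible once the retention interval has elapsed while overwrites remain blocked.

```python
from datetime import datetime, timedelta, timezone


class ImmutableBlobError(Exception):
    """Raised when an operation violates the retention policy."""


class WormBlob:
    """Conceptual write once, read many blob with time-based retention."""

    def __init__(self, data: bytes, created: datetime, retention_days: int):
        self._data = data
        self.deleted = False
        self.retained_until = created + timedelta(days=retention_days)

    def read(self) -> bytes:
        """Reading is always permitted while the blob exists."""
        if self.deleted:
            raise ImmutableBlobError("blob has been deleted")
        return self._data

    def overwrite(self, data: bytes) -> None:
        """Modification is never permitted in this model."""
        raise ImmutableBlobError("blob content cannot be modified")

    def delete(self, now: datetime) -> None:
        """Deletion is permitted only after the retention interval ends."""
        if now < self.retained_until:
            raise ImmutableBlobError(
                f"blob is retained until {self.retained_until.isoformat()}"
            )
        self.deleted = True


created = datetime(2024, 1, 1, tzinfo=timezone.utc)
blob = WormBlob(b"signed contract", created, retention_days=365)

try:
    blob.delete(now=created + timedelta(days=30))
    early_delete_blocked = False
except ImmutableBlobError:
    early_delete_blocked = True

content = blob.read()  # still readable during the retention interval
blob.delete(now=created + timedelta(days=366))  # allowed after expiry
```

The model shows the essential guarantee: during the retention interval the stored bytes can be read but neither changed nor removed, which is what allows a document to be kept in its original state for the required period.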